InfiniteTalk Video to Video ComfyUI workflow for Videcool

The InfiniteTalk Video to Video workflow in Videcool provides a powerful and flexible way to transform videos by applying style changes, character replacements, or creative effects frame-by-frame. Designed for speed, clarity, and creative control, this workflow is served by ComfyUI and uses the InfiniteTalk and WAN 2.1 AI video processing models repackaged for ComfyUI.

What can this ComfyUI workflow do?

In short: Video to video transformation.

This workflow takes an input video and transforms it frame-by-frame using advanced diffusion technology, while preserving motion consistency and temporal coherence. It interprets your reference video and optional text prompt, and outputs a modified video with style changes, character swaps, or other creative transformations. The base AI models are optimized for maintaining video quality and smooth motion across all frames.

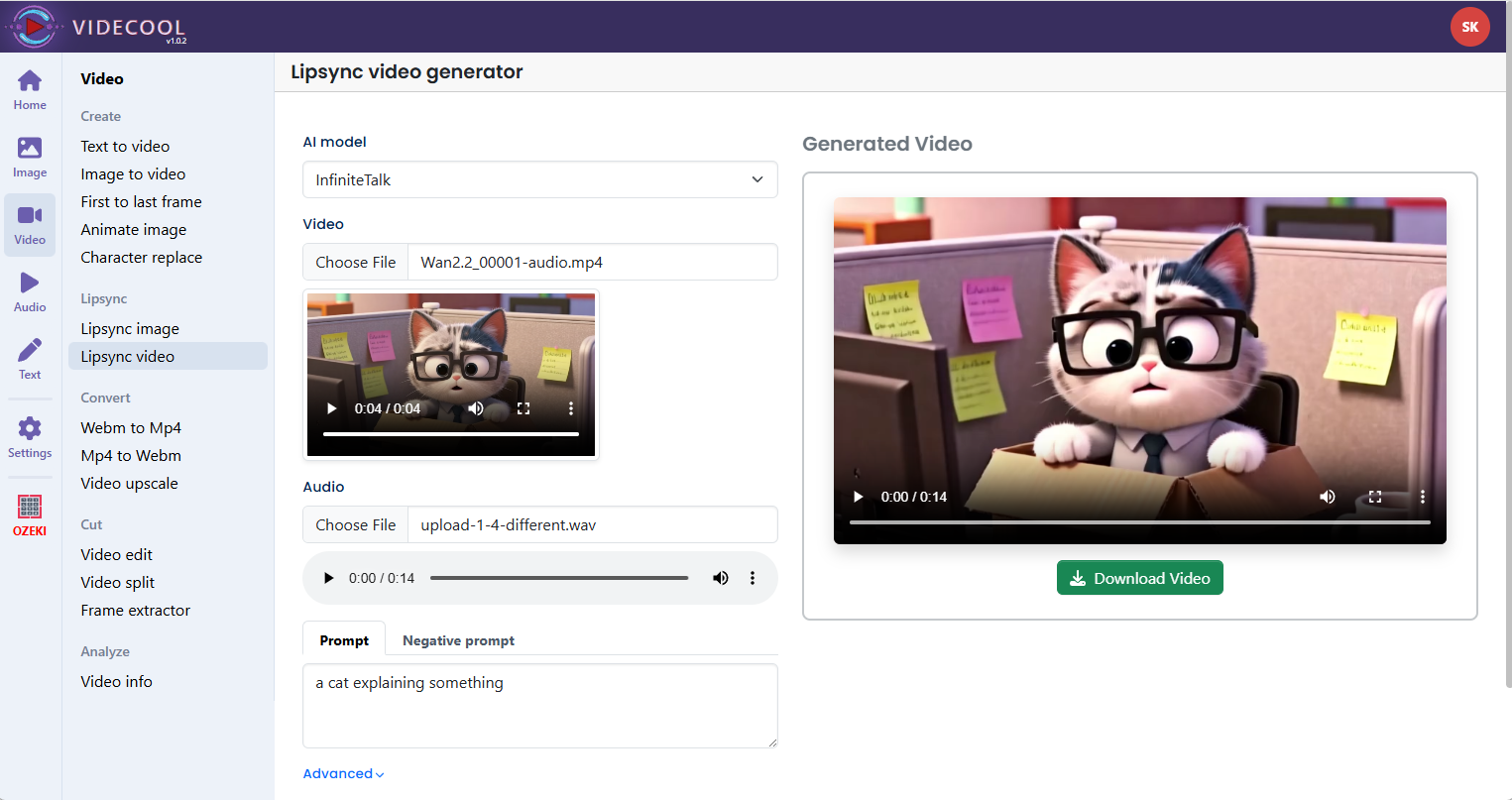

Example usage in Videcool

Download the ComfyUI workflow

Download ComfyUI Workflow file: wanvideo_V2V_InfiniteTalk-api.json

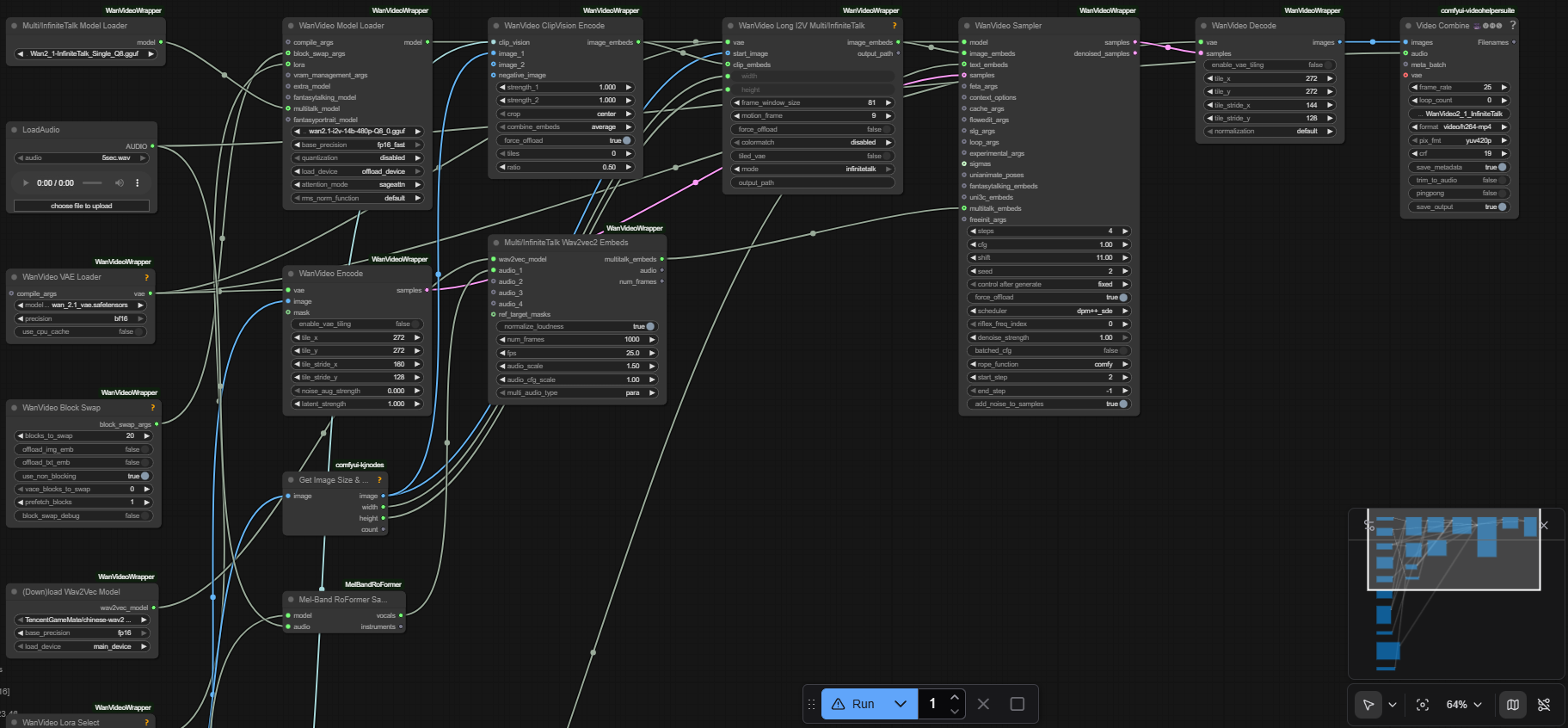

Image of the ComfyUI workflow

This figure provides a visual overview of the workflow layout inside ComfyUI. Each node is placed in logical order to establish a clean and efficient video processing pipeline, starting from video loading through frame-by-frame processing, motion conditioning, sampling, and final video assembly. The structure makes it easy to understand how the video models, text encoders, motion encoders, and audio processing nodes interact. Users can modify or expand parts of the workflow to create custom variations.

Installation steps

Step 1: Download the WAN 2.1 I2V model: cd ComfyUI\models\diffusion_models && wget https://huggingface.co/city96/Wan2.1-I2V-14B-480P-gguf/resolve/main/wan2.1-i2v-14b-480p-Q8_0.ggufStep 2: Download the InfiniteTalk model: cd ComfyUI\models\diffusion_models && wget https://huggingface.co/Kijai/WanVideo_comfy_GGUF/resolve/main/InfiniteTalk/Wan2_1-InfiniteTalk_Single_Q8.gguf

Step 3: Download the MelBandRoFormer audio model: cd ComfyUI\models\diffusion_models && wget https://huggingface.co/Kijai/MelBandRoFormer_comfy/resolve/main/MelBandRoformer_fp16.safetensors

Step 4: Download the UMT5 text encoder: cd ComfyUI\models\text_encoders && wget https://huggingface.co/Kijai/WanVideo_comfy/resolve/main/umt5-xxl-enc-bf16.safetensors

Step 5: Download the CLIP Vision encoder: cd ComfyUI\models\clip_vision && wget https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/clip_vision/clip_vision_h.safetensors

Step 6: Download the LightX2V LoRA: cd ComfyUI\models\loras && wget https://huggingface.co/Kijai/WanVideo_comfy/resolve/main/Lightx2v/lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors

Step 7: Install ComfyUI-WanVideoWrapper: cd ComfyUI/custom_nodes/ && git clone https://github.com/kijai/ComfyUI-WanVideoWrapper && cd ComfyUI-WanVideoWrapper && pip install -r requirements.txt

Step 8: Open ComfyUI Manager → Manage custom nodes → Search "MelBandRoFormer" → Install

Step 9: Open ComfyUI Manager → Manage custom nodes → Search "kjnodes" → Install

Step 10: Open ComfyUI Manager → Manage custom nodes → Search "VideoHelperSuite" → Install

Step 11: Download the wanvideo_V2V_InfiniteTalk-api.json workflow file into your home directory.

Step 12: Restart ComfyUI so all models and custom nodes are properly loaded.

Step 13: Open the ComfyUI graphical user interface (ComfyUI GUI).

Step 14: Load the wanvideo_V2V_InfiniteTalk-api.json workflow in the ComfyUI GUI.

Step 15: Select your input video and optionally enter a text prompt or reference image, then hit run to transform the video.

Installation video

The workflow requires a source video, the InfiniteTalk and WAN 2.1 models, and a few basic parameter adjustments to begin transforming videos. After loading the JSON file, users can select the input video, adjust motion strength, sampling steps, and optional text guidance. Once executed, the models process each frame while maintaining temporal coherence, then assemble the frames back into a final video that can be saved and reused across other Videcool tools.

Prerequisites

To run the workflow correctly, download the following model files and place them into your ComfyUI directory. These files ensure the workflow can process video frames, preserve motion, understand language, and generate consistent output. Proper installation into the following locations is essential before running the workflow: {your ComfyUI director}/models.

ComfyUI\models\diffusion_models\wan2.1-i2v-14b-480p-Q8_0.gguf

https://huggingface.co/city96/Wan2.1-I2V-14B-480P-gguf/resolve/main/wan2.1-i2v-14b-480p-Q8_0.gguf

ComfyUI\models\diffusion_models\Wan2_1-InfiniteTalk_Single_Q8.gguf

https://huggingface.co/Kijai/WanVideo_comfy_GGUF/resolve/main/InfiniteTalk/Wan2_1-InfiniteTalk_Single_Q8.gguf

ComfyUI\models\diffusion_models\MelBandRoformer_fp16.safetensors

https://huggingface.co/Kijai/MelBandRoFormer_comfy/resolve/main/MelBandRoformer_fp16.safetensors

ComfyUI\models\text_encoders\umt5-xxl-enc-bf16.safetensors

https://huggingface.co/Kijai/WanVideo_comfy/resolve/main/umt5-xxl-enc-bf16.safetensors

ComfyUI\models\clip_vision\clip_vision_h.safetensors

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/clip_vision/clip_vision_h.safetensors

ComfyUI\models\loras\lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors

https://huggingface.co/Kijai/WanVideo_comfy/resolve/main/Lightx2v/lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors

How to use this workflow in Videcool

Videcool integrates seamlessly with ComfyUI, allowing users to transform videos directly without managing complex node graphs or custom code. After importing the workflow file into ComfyUI and confirming all model files are installed, simply select your input video, add optional prompts, and click generate. The system handles all backend interactions with ComfyUI and frame processing. This makes video transformation intuitive and accessible, even for users who are not keen on learning how ComfyUI works.

ComfyUI nodes used

This workflow uses the following nodes. Each node performs a specific role, such as loading videos, extracting audio, encoding text and visual features, processing frames through the InfiniteTalk model, and reassembling frames back into a video. Together they create a reliable and modular pipeline that can be easily extended or customized.

- Multi/InfiniteTalk Model Loader

- WanVideo Model Loader

- LoadAudio

- WanVideo Sampler

- WanVideo VAE Loader

- WanVideo Decode

- Video Combine VHS

- WanVideo Block Swap

- (Down)load Wav2Vec Model

- WanVideo Lora Select

- WanVideo Long I2V Multi/InfiniteTalk

- Multi/InfiniteTalk Wav2vec2 Embeds

- Load Video (Upload) VHS

- WanVideo Encode

- WanVideo ClipVision Encode

- Load CLIP Vision

- WanVideo TextEncode Cached

- Get Image Size & Count

- Mel-Band RoFormer Model Loader

- Mel-Band RoFormer Sampler

Video resolution and performance

The InfiniteTalk Video to Video workflow is optimized for 480p video resolution to balance quality and processing time. Higher resolutions require substantially more VRAM and processing time, while lower resolutions may lose fine detail. For best results, use source videos with resolution around 480p, frame rates of 24-30 fps, and duration appropriate for your available GPU memory. Adjusting sampling steps and model precision can help optimize performance on different hardware.

Conclusion

The InfiniteTalk Video to Video workflow is a robust, powerful, and user-friendly solution for transforming videos in Videcool. With its combination of high-quality InfiniteTalk and WAN 2.1 models, a modular ComfyUI pipeline, and seamless platform integration, it enables beginners and professionals alike to produce creative video transformations, style transfers, and character modifications with ease. By understanding the workflow components and installation steps, users can unlock the full potential of AI-assisted video-to-video generation in Videcool.