Ace Steps Audio Generator ComfyUI workflow for Videcool

The Ace Steps Audio Generator workflow in Videcool provides a flexible way to generate high-quality audio directly from text prompts. Designed for speed, clarity, and creative control, this workflow is served by ComfyUI and uses the ACE-Step text-to-audio model, developed by ACE Studio and StepFun and repackaged for ComfyUI by Comfy-Org.

What can this ComfyUI workflow do?

In short: Text to audio generation.

This workflow converts written text prompts into fully generated audio tracks using diffusion technology. It interprets your prompt and outputs detailed, coherent audio with high fidelity. The base AI model it uses is optimized for music and sound generation and can produce audio at various quality levels and sample rates.

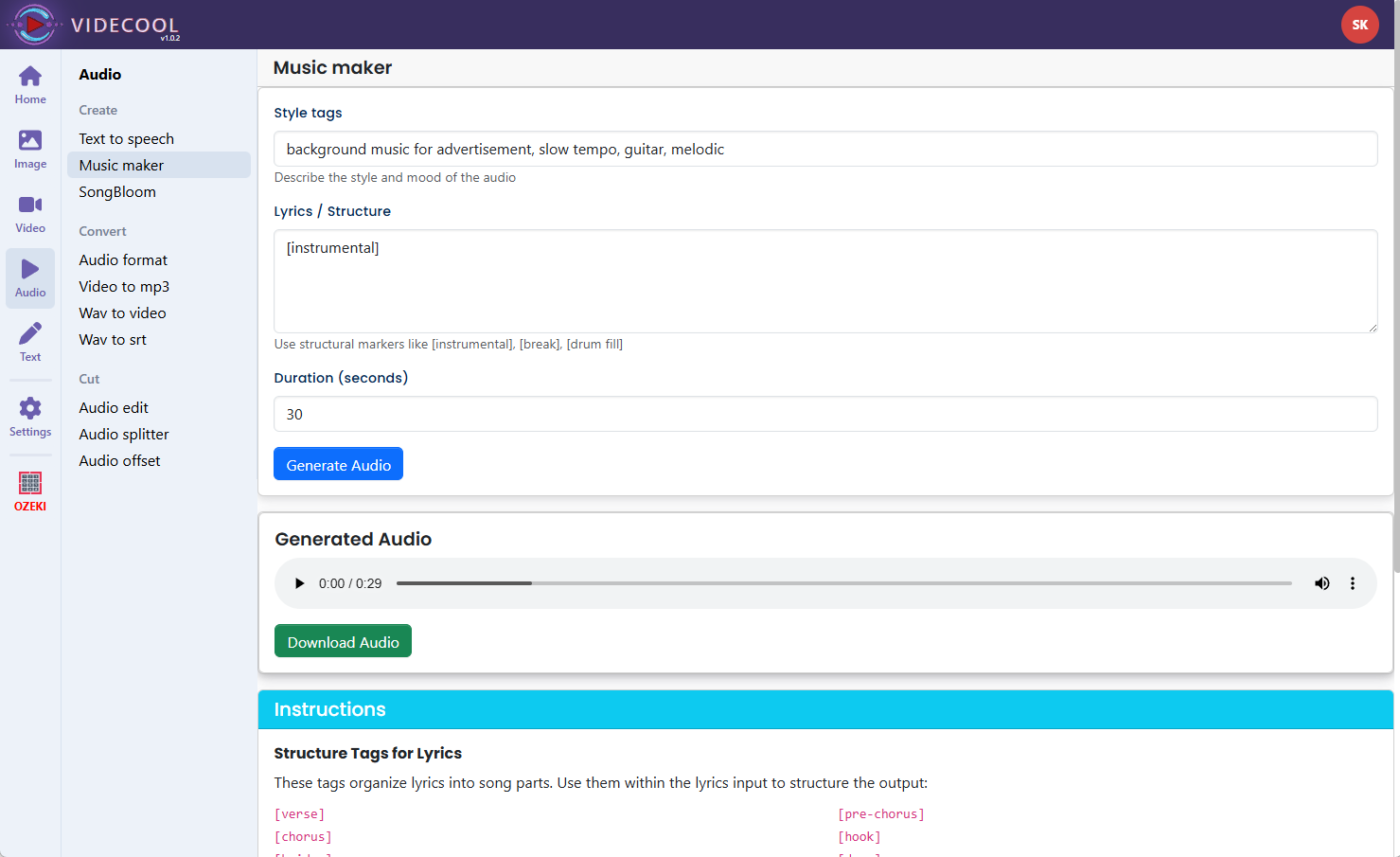

Example usage in Videcool

Download the ComfyUI workflow

Download ComfyUI Workflow file: audio_ace_step_1_t2a_song.json

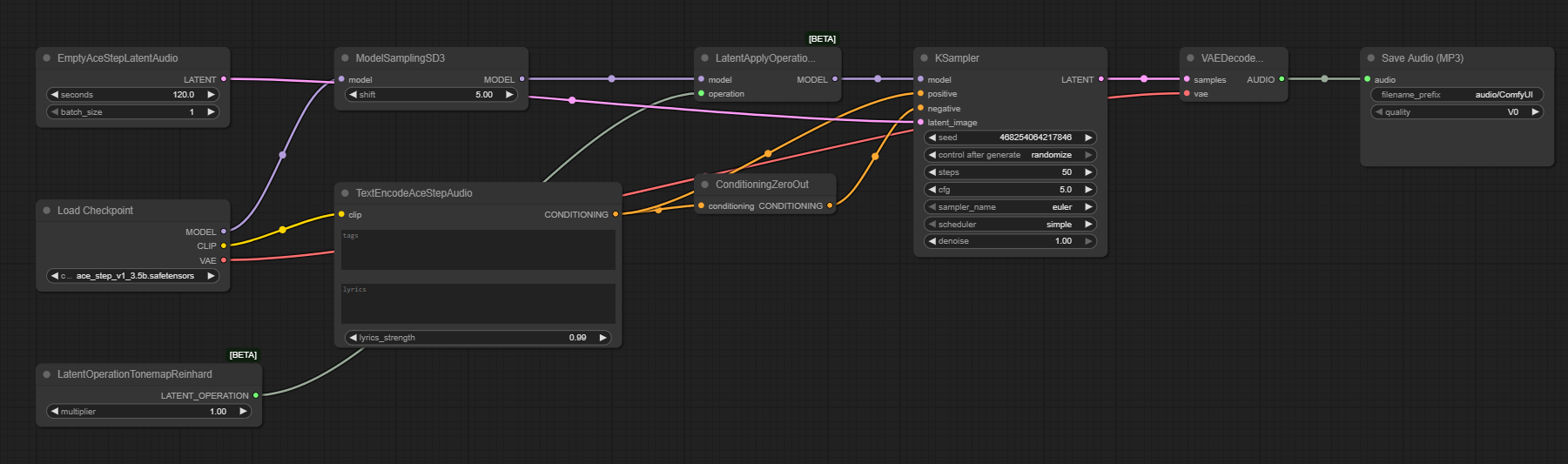

Image of the ComfyUI workflow

This figure provides a visual overview of the workflow layout inside ComfyUI. Each node is placed in logical order to establish a clean and efficient audio generation pipeline. The structure makes it easy to understand how the text encoders, model loader, sampler, and audio VAE decoder interact. Users can modify or expand parts of the workflow to create custom variations and fine-tune audio generation parameters.

Installation steps

Step 1: Download ace_step_v1_3.5b.safetensors into /ComfyUI/models/checkpoints/ace_step_v1_3.5b.safetensors.

Step 2: Download the audio_ace_step_1_t2a_song.json workflow file into your home directory.

Step 3: Restart ComfyUI so the new model files are recognized.

Step 4: Open the ComfyUI graphical user interface (ComfyUI GUI).

Step 5: Load the audio_ace_step_1_t2a_song.json workflow in the ComfyUI GUI.

Step 6: Enter a text prompt describing the audio you want to generate into the TextEncodeAceStepAudio node.

Step 7: Adjust sampler steps, guidance scale, and other generation parameters as needed.

Step 8: Hit run to generate the audio track.

Step 9: The generated audio will be saved as an MP3 file by the Save Audio node.

Step 10: Open Videcool in your browser, select the Audio Generator Ace Steps tool, and use the generated audio tracks in your video projects.
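Steps 6 to 8 can also be scripted against a running ComfyUI server. The following is a minimal sketch, not part of the workflow itself, and it rests on several assumptions: the workflow has been exported from ComfyUI in API format (a dictionary of node IDs, each with a class_type and inputs), the prompt text lives in the tags input of the TextEncodeAceStepAudio node, the guidance scale is the cfg input of the KSampler node, and the server listens on ComfyUI's default local address. The helper names apply_settings and queue_workflow are hypothetical.

```python
import copy
import json
import urllib.request


def apply_settings(workflow, tags, steps=None, cfg=None):
    """Return a copy of an API-format workflow with prompt and sampler values set."""
    updated = copy.deepcopy(workflow)
    for node in updated.values():
        inputs = node.setdefault("inputs", {})
        if node.get("class_type") == "TextEncodeAceStepAudio":
            # Assumption: the prompt text is stored in the "tags" input.
            inputs["tags"] = tags
        elif node.get("class_type") == "KSampler":
            if steps is not None:
                inputs["steps"] = steps
            if cfg is not None:
                # "cfg" is the KSampler input for the guidance scale.
                inputs["cfg"] = cfg
    return updated


def queue_workflow(workflow, server="http://127.0.0.1:8188"):
    """POST the workflow to ComfyUI's /prompt endpoint and return the parsed reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        server + "/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

A typical call would load the exported JSON with json.load, pass it through apply_settings with a prompt such as "calm piano, slow tempo", and hand the result to queue_workflow. The original dictionary is left untouched, so the same base workflow can be reused for several prompts.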

Installation video

The workflow requires only a text prompt and a few basic parameter adjustments to begin generating audio. After loading the JSON file, users can set the guidance scale, the number of sampling steps, and the prompt text. Once executed, the sampler processes the latent audio representation, and the decoder turns the result into a final audio file. The result can be saved and reused across other Videcool tools. Check out the following video to see the model in action:

Prerequisites

To run the workflow correctly, download the ACE-Step model checkpoint file and place it into your ComfyUI directory. This file contains the audio generation model weights that convert text into latent audio representations and then decode them into finished audio files. The file must be in the following location before you run the workflow: {your ComfyUI directory}/models/checkpoints.

ComfyUI\models\checkpoints\ace_step_v1_3.5b.safetensors

https://huggingface.co/Comfy-Org/ACE-Step_ComfyUI_repackaged/resolve/main/all_in_one/ace_step_v1_3.5b.safetensors
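The download can also be done from a script. The sketch below uses only the Python standard library and assumes you pass in the root of your own ComfyUI installation; the function names checkpoint_destination and download_checkpoint are hypothetical, and the URL and target folder are the ones listed above.

```python
from pathlib import Path
import shutil
import urllib.request

MODEL_URL = (
    "https://huggingface.co/Comfy-Org/ACE-Step_ComfyUI_repackaged/"
    "resolve/main/all_in_one/ace_step_v1_3.5b.safetensors"
)


def checkpoint_destination(comfy_root, url=MODEL_URL):
    """Return the path the checkpoint should occupy under models/checkpoints."""
    filename = url.rsplit("/", 1)[-1]
    return Path(comfy_root) / "models" / "checkpoints" / filename


def download_checkpoint(comfy_root, url=MODEL_URL):
    """Download the checkpoint unless it is already present; return its path."""
    dest = checkpoint_destination(comfy_root, url)
    if dest.exists():
        return dest
    dest.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temporary name first so an interrupted download
    # is never mistaken for a complete checkpoint.
    partial = dest.with_name(dest.name + ".part")
    with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
        shutil.copyfileobj(response, out)
    partial.replace(dest)
    return dest
```

Calling download_checkpoint with the path to your ComfyUI folder places the file where the Load Checkpoint node looks for it. The file is large, so the download can take a while; restart ComfyUI afterwards so the new model is recognized.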

How to use this workflow in Videcool

Videcool integrates seamlessly with ComfyUI, allowing users to generate audio directly without external complexity. After importing the workflow file, simply enter your audio prompt and click generate. The system handles all backend interactions with ComfyUI. This makes audio generation intuitive and accessible, even for users who are not keen on learning how ComfyUI works. The following video shows how this model can be used in Videcool:

ComfyUI nodes used

This workflow uses the following nodes. Each node performs a specific role, such as loading the checkpoint, encoding text prompts into audio conditioning, sampling in the audio latent space, applying latent operations, and finally decoding and saving the audio output. Together they form a modular pipeline that can be easily extended or customized.

- EmptyAceStepLatentAudio

- Load Checkpoint

- TextEncodeAceStepAudio

- ModelSamplingSD3

- ConditioningZeroOut

- LatentApplyOperationCFG

- KSampler

- LatentOperationTonemapReinhard

- VAE Decode

- Save Audio (MP3)
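When customizing the workflow, it can be useful to confirm that none of these nodes was removed by accident. The sketch below checks an API-format workflow (a dictionary of node IDs, each with a class_type) against the list above. Three internal class names are assumptions about how ComfyUI registers the display names used here: CheckpointLoaderSimple for Load Checkpoint, VAEDecodeAudio for VAE Decode, and SaveAudioMP3 for Save Audio (MP3). The function name missing_node_types is hypothetical.

```python
EXPECTED_NODE_TYPES = [
    "EmptyAceStepLatentAudio",
    "CheckpointLoaderSimple",   # assumed class name for "Load Checkpoint"
    "TextEncodeAceStepAudio",
    "ModelSamplingSD3",
    "ConditioningZeroOut",
    "LatentApplyOperationCFG",
    "KSampler",
    "LatentOperationTonemapReinhard",
    "VAEDecodeAudio",           # assumed class name for "VAE Decode"
    "SaveAudioMP3",             # assumed class name for "Save Audio (MP3)"
]


def missing_node_types(workflow, expected=EXPECTED_NODE_TYPES):
    """Return the expected node types that do not appear in the workflow."""
    present = {node.get("class_type") for node in workflow.values()}
    return [name for name in expected if name not in present]
```

An empty list means every expected node type is present; any names returned point to the part of the pipeline that needs to be restored.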

Base AI model

This workflow is built on the ACE-Step audio generation model, a modern and highly capable diffusion-based text-to-audio generator. ACE-Step provides clarity, coherence, and creative flexibility for audio synthesis, making it suitable for both artistic and commercial use cases. The model benefits from advanced training on diverse audio data and offers consistent results across a variety of musical styles and sound descriptions. More details, model weights, and documentation can be found on the following links:

Hugging Face repository:

https://huggingface.co/Comfy-Org/ACE-Step_ComfyUI_repackaged

Original ACE-Step repository:

https://github.com/aifilab/ACE-Step

Audio quality and parameters

The Ace Steps Audio Generator produces high-fidelity audio, and in this workflow the result is saved as an MP3 file. Generation quality can be adjusted through the sampling steps and guidance scale parameters. Higher sampling steps generally produce more detailed and coherent audio but take longer to generate. The model can be configured for different quality levels and sample rates depending on your project requirements.

Conclusion

The Ace Steps Audio Generator ComfyUI workflow is a robust, powerful, and user-friendly solution for generating AI-driven audio in Videcool. With its combination of high-quality models, a modular ComfyUI pipeline, and seamless platform integration, it enables beginners and professionals alike to produce creative and professional-grade audio with ease. By understanding the workflow components and advantages, users can unlock the full potential of AI-assisted audio generation in Videcool.